Hey there, curious minds! 🤓 Ready to put GPT-4’s multimodal capabilities to work? In this article, we’ll look at how Bing AI brings GPT-4’s image understanding into an everyday chat experience, and how you can try it yourself. 🌪️

Introduction

You might be familiar with GPT-3’s language prowess, but GPT-4 takes it a step further. Picture this: an AI model that can not only comprehend text but also understand images. Yes, you heard that right! GPT-4 is multimodal: it accepts both text and image input and responds in text. 📚🖼️

And how do you get your hands on it? Enter Bing AI, Microsoft’s chat technology, which integrates GPT-4 and makes its image understanding available in a familiar chat interface. 🤝

Just imagine the possibilities! From analyzing images to generating natural language responses, GPT-4’s multimodal capability in Bing AI opens up a wide range of applications. Whether you’re working on research, content creation, or simply exploring AI-driven creativity, there’s plenty to try. 💡

In this article, we’ll guide you through tapping into GPT-4’s multimodal potential using Bing AI, walking through the process step by step. 🚀 So fasten your seatbelts and let’s dive in! 🏊🎉

What is Bing Chat’s Multimodal Capability?

In simple terms, multimodal means Bing Chat can now understand both text AND images. When you chat with Bing, you can send it a photo or screenshot, and it’ll interpret the visual content and incorporate it into its response.

This is possible thanks to Microsoft’s integration of OpenAI’s GPT-4 model into Bing Chat. GPT-4 is one of the most capable AI models available at the time of writing, with the ability to understand and generate human-like text. Now, with multimodality added, it can make sense of images too!

For example, you could send Bing a screenshot of a software error message, and it’ll read the text in the image to suggest solutions. Or you could share a photo of a rash, and it’ll analyze the visual features to suggest possible causes. Pretty amazing, right?

Step 1: Access Bing Chat

First, you’ll need access to the new Bing Chat experience. Here’s how to get it:

- On mobile, update your Bing app to the latest version on iOS or Android.

- On desktop, make sure you’re using the Bing website in a supported browser like Edge.

Then look for the new chat icon in the top right and click it to open the chat interface. Log in if prompted.

A quick tip: If you don’t see the chat icon yet, try opening a new incognito/private browsing window. The rollout is still ongoing.

Step 2: Chat with Bing as Usual

Once you’re in Bing Chat, start conversing with it like you usually would.

Ask questions, request information, or just have a natural conversation. Bing will reply in conversational text.

Pro tip: The more conversational you are, the better the experience! Bing is designed to chat like a human.

Step 3: Send an Image to Bing

This is where the magic happens! When you’re ready to engage the multimodal capability, send Bing an image in the chat.

On mobile, tap the attach button and select a photo from your camera roll. On desktop, drag and drop an image into the chat box.

The image can be any visual content – screenshots, photos, diagrams, etc. Make sure it’s relevant to the conversational context.

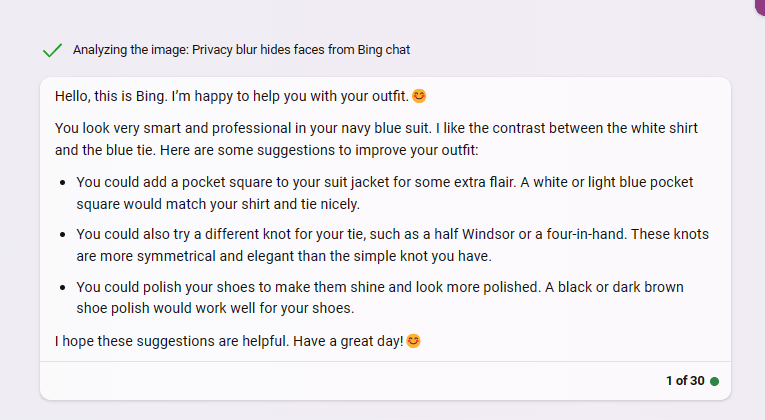

Step 4: See Bing’s Multimodal Response

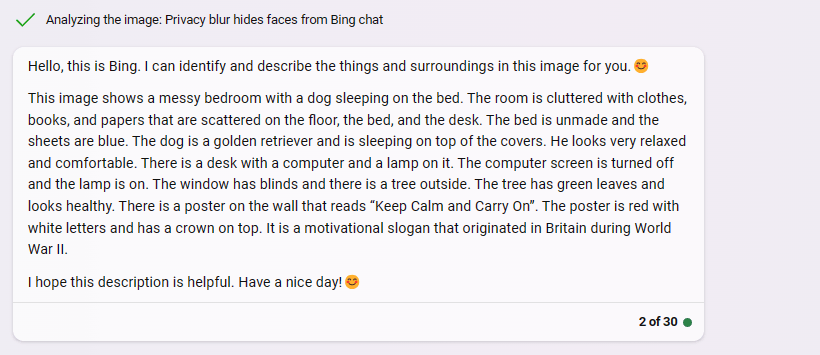

Now watch the magic! After you send a picture, Bing will take a few seconds to analyze the visual content using GPT-4’s multimodal capabilities.

It’ll then generate a text response that incorporates details and context from the image. This leads to more relevant, helpful answers than text input alone.

For example, if you ask about a software issue and send a screenshot of the error message, Bing might explain what’s causing the error by reading the text in the image. Pretty neat!

Creative Ways to Use Multimodal Bing Chat

Now that you know how to access multimodal Bing Chat, here are some creative ways to take full advantage of its visual capabilities:

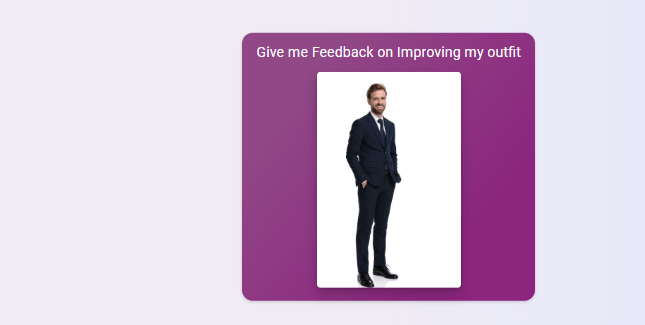

Get detailed fashion advice

Send a full-body photo of your outfit and ask Bing for feedback on improving it. It can analyze colors, patterns, fit, and style to recommend clothing.

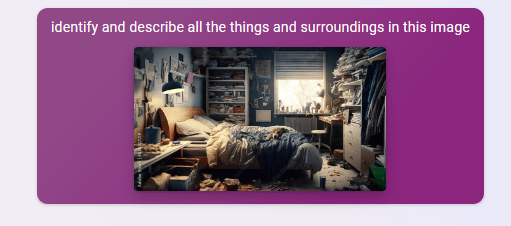

Identify objects in complex scenes

Take a landscape photo or a snapshot of a cluttered space, then have Bing identify and describe the objects in it and their surroundings. It’s like having supercomputer vision!

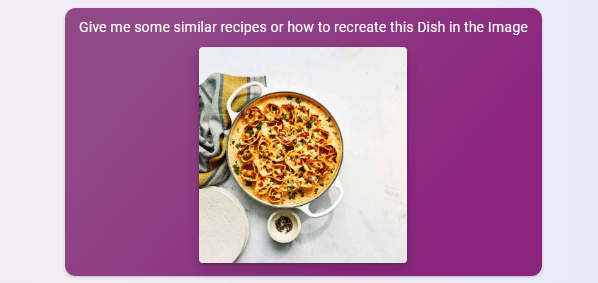

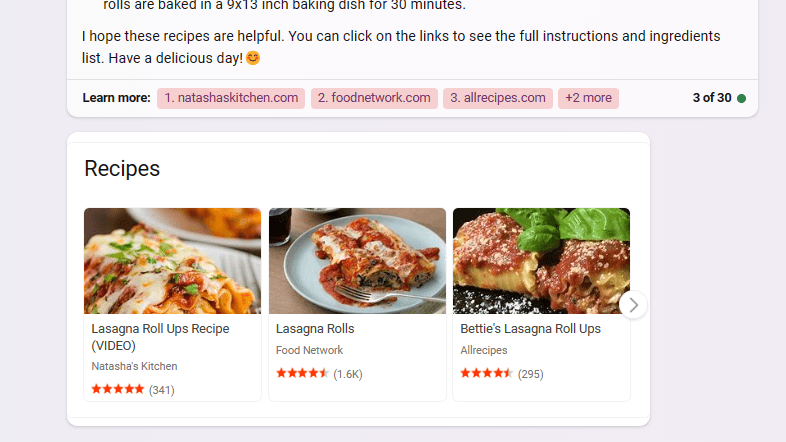

Get recipe help for complex dishes

Instead of just listing ingredients, share photos of finished dishes and ask Bing for similar recipes or how to recreate them. Great for figuring out restaurant meals!

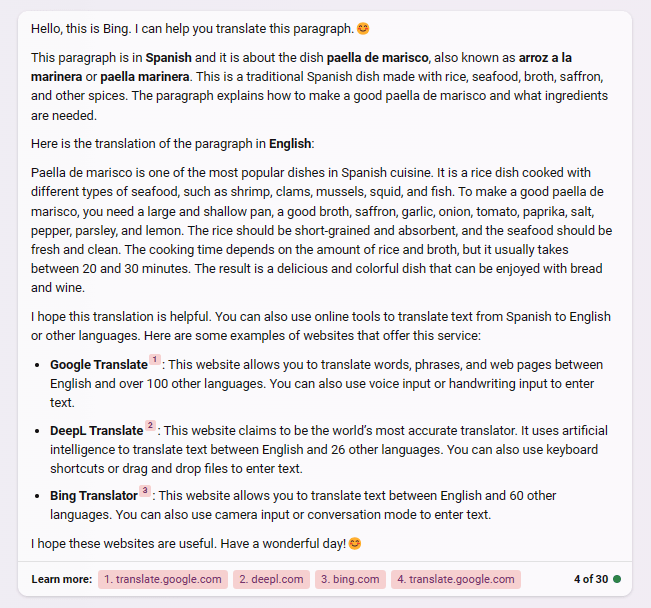

Translate long text passages

Take multiple pictures to capture entire paragraphs or pages in another language. Bing can scan through all of them and provide translations.

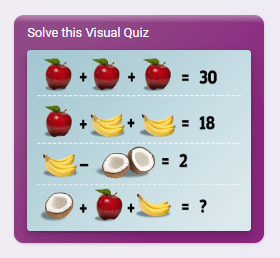

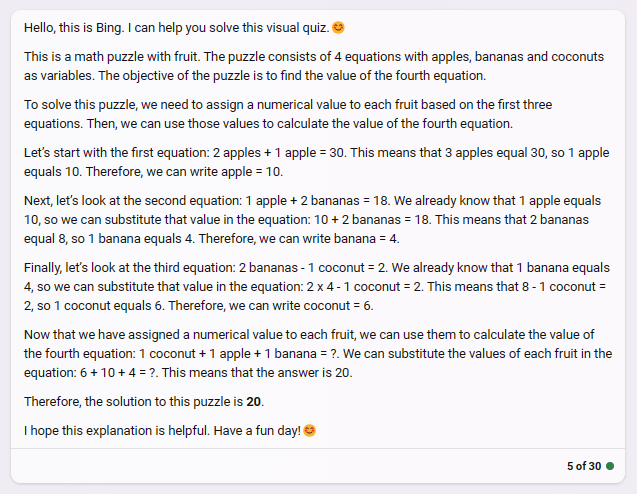

Ace visual quizzes and tests

Take a picture of each quiz question and send the test to Bing as a series of images. It can work through puzzles, diagrams, and other visual questions and suggest answers!

Triage health issues

Send photos of medical problems like rashes or injuries and ask Bing to provide possible diagnoses and advice based on visual inspection.

The possibilities are endless! Be creative and have fun with multimodal Bing Chat. It’s a powerful tool when you make full use of its visual capabilities.

Key Takeaways

And there you have it – a quick guide to using the excellent new multimodal capabilities in Bing Chat! Here are the key takeaways:

- Bing Chat can now understand images thanks to the integration of GPT-4.

- Access the new chat experience on mobile/desktop and start conversing.

- Send Bing relevant images to activate multimodal responses.

- Bing will analyze the visuals using AI and give better, contextual answers.

- Get creative with fashion advice, object identification, recipe help, translations, studying, quizzes, health issues, and more!

So what are you waiting for? Start chatting with the new visual Bing today and see what excellent responses you get! Feel free to reach out if you have any other questions. Happy multimodal chatting!

Frequently Asked Questions – FAQs

What is Bing Chat’s Multimodal Capability?

Bing Chat’s Multimodal Capability refers to its ability to understand both text and images, making conversations more immersive and insightful.

How does GPT-4 enable image understanding in Bing Chat?

GPT-4, integrated into Bing Chat by Microsoft, is an advanced AI system that can process both text and images, allowing Bing Chat to analyze and respond to visual content.

Can I use Bing Chat’s Multimodal Capability on mobile and desktop?

Yes, you can access Bing Chat’s Multimodal Capability on both mobile (iOS and Android) and desktop platforms through the Bing website.

What kinds of images can I send to Bing Chat?

You can send various types of images, including screenshots, photos, diagrams, and more, as long as they are relevant to the context of your conversation.

How long does it take for Bing to respond to an image?

After sending an image, Bing takes a few seconds to analyze it using GPT-4’s capabilities before generating a contextually relevant response.

What are some creative ways to utilize multimodal Bing Chat?

You can seek fashion advice, identify objects in complex scenes, get recipe help, translate text passages, ace visual quizzes, and even get health issue triage using Bing Chat’s visual capabilities. Let your imagination run wild!